1 Langevin Dynamics

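NOTE: How to sample from a distribution. The Langevin dynamics for sampling from a known distribution $p(x)$ is an iterative procedure for $t = 1, \ldots, T$:

$$x_{t+1} = x_t + \tau \nabla_x \log p(x_t) + \sqrt{2\tau}\, z_t, \qquad z_t \sim \mathcal{N}(0, I),$$

where $\tau$ is the step size, which users can control, and $z_t$ is white noise.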
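A minimal sketch of the update above in 1D, assuming a Gaussian target $p(x) = \mathcal{N}(3, 1)$ whose score $-(x - 3)$ is known in closed form. The names `langevin_sample` and `gaussian_score` are illustrative only. Many independent chains are run, and the spread of their final states is compared with the target.

```python
import math
import random


def langevin_sample(score, x0, tau, n_steps, rng):
    """Run x_{t+1} = x_t + tau * score(x_t) + sqrt(2 * tau) * z_t with z_t ~ N(0, 1)."""
    x = x0
    for _ in range(n_steps):
        z = rng.gauss(0.0, 1.0)  # white noise
        x = x + tau * score(x) + math.sqrt(2.0 * tau) * z
    return x


# Assumed target for illustration: p(x) = N(MU, S^2), whose score is -(x - MU) / S^2.
MU, S = 3.0, 1.0


def gaussian_score(x):
    return -(x - MU) / S**2


rng = random.Random(0)
samples = [langevin_sample(gaussian_score, 0.0, tau=0.01, n_steps=800, rng=rng)
           for _ in range(1000)]
mean = sum(samples) / len(samples)
var = sum((x - mean) ** 2 for x in samples) / len(samples)
print(f"sample mean {mean:.3f} (target 3.0), sample variance {var:.3f} (target 1.0)")
```

For this Gaussian target, the chain's stationary variance works out to $S^2 / (1 - \tau / (2S^2))$. That is slightly above $S^2$, which reflects the discretization error of a finite step size $\tau$.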

- Without the noise term, Langevin dynamics is gradient descent on $-\log p(x)$ (equivalently, gradient ascent on $\log p(x)$).

The intuition is that if we want to sample from a distribution $p(x)$, the “optimal” location for $x$ is certainly where $p(x)$ is maximized (viewing the peak as a representative of the distribution). So sampling is closely related to solving the optimization

$$x^* = \arg\max_x \log p(x).$$

distribution <=> peak <=> optimal solution
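Dropping the noise term turns the iteration into a pure optimizer that stops at a peak. A small sketch of this behavior, assuming an equal-weight 1D mixture of $\mathcal{N}(-3, 1)$ and $\mathcal{N}(3, 1)$ as the example density (`mixture_score` and `noiseless_langevin` are hypothetical helper names):

```python
import math


def mixture_score(x, means=(-3.0, 3.0), sigma=1.0):
    """Score of an equal-weight 1D Gaussian mixture (an assumed example density)."""
    logits = [-((x - m) ** 2) / (2 * sigma**2) for m in means]
    top = max(logits)
    weights = [math.exp(lg - top) for lg in logits]  # unnormalized responsibilities
    total = sum(weights)
    # d/dx log p(x) = sum_k r_k(x) * (-(x - mu_k) / sigma^2)
    return sum(w / total * (-(x - m) / sigma**2) for w, m in zip(weights, means))


def noiseless_langevin(score, x0, tau=0.1, n_steps=500):
    """The Langevin update with the noise term dropped: plain gradient ascent on log p."""
    x = x0
    for _ in range(n_steps):
        x = x + tau * score(x)
    return x


x_right = noiseless_langevin(mixture_score, 0.5)
x_left = noiseless_langevin(mixture_score, -0.5)
print(f"start +0.5 -> {x_right:.4f}, start -0.5 -> {x_left:.4f}")
```

Each run lands on whichever mode is nearest its starting point and stays there, so no spread of samples is produced. A start exactly at 0 would never move, because the score of this symmetric mixture is zero there.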

WARNING: Langevin dynamics is stochastic gradient descent, i.e., gradient descent on $-\log p(x)$ with injected Gaussian noise.

- We add the noise because we want to sample from the distribution rather than solve the optimization problem; without it, the iterates would simply converge to a peak of $p(x)$.

2 (Stein’s) Score Function

The second term of the Langevin dynamics equation, $\nabla_x \log p(x)$, has a formal name: Stein’s score function, denoted by

$$s(x) \stackrel{\text{def}}{=} \nabla_x \log p(x).$$

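The way to understand the score function is to remember that it is the gradient with respect to the data $x$, not with respect to any model parameters. For any high-dimensional distribution $p(x)$ with $x \in \mathbb{R}^d$, the gradient gives us a vector field

$$\nabla_x \log p(x) = \left[\frac{\partial \log p(x)}{\partial x_1}, \ldots, \frac{\partial \log p(x)}{\partial x_d}\right]^\top.$$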
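To make the "gradient with respect to the data" point concrete, here is a sketch that approximates the score of an assumed 2D Gaussian $\mathcal{N}\big((1, -1), \mathrm{diag}(1, 4)\big)$ by central finite differences in $x$. It then evaluates the score on a coarse grid, giving one arrow of the vector field per grid point. `log_gaussian_2d` and `score_fd` are illustrative names.

```python
import math


def log_gaussian_2d(x, mu=(1.0, -1.0), var=(1.0, 4.0)):
    """log N(x | mu, diag(var)) for a 2D point x (an assumed example density)."""
    return sum(-0.5 * (xi - mi) ** 2 / vi - 0.5 * math.log(2 * math.pi * vi)
               for xi, mi, vi in zip(x, mu, var))


def score_fd(log_p, x, eps=1e-5):
    """Approximate the score grad_x log p(x) by central differences, one coordinate at a time."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_plus[i] += eps
        x_minus = list(x)
        x_minus[i] -= eps
        grad.append((log_p(x_plus) - log_p(x_minus)) / (2 * eps))
    return grad


# Each grid point gets one arrow s(x) of the vector field.
for gx in (-1.0, 1.0, 3.0):
    for gy in (-3.0, -1.0, 1.0):
        sx, sy = score_fd(log_gaussian_2d, [gx, gy])
        print(f"x = ({gx:+.0f}, {gy:+.0f})   s(x) = ({sx:+.2f}, {sy:+.2f})")
```

For this density the analytic score is $\big(-(x_1 - 1),\, -(x_2 + 1)/4\big)$, which the finite differences reproduce. Every arrow points back toward the mean $(1, -1)$.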

Geometric Interpretations of the Score Function:

- The magnitude of the vectors is largest where $\log p(x)$ changes fastest. Therefore, in regions close to the peak of $\log p(x)$, the gradient is mostly very weak.

- The vector field indicates how a data point should travel on the contour map of $p(x)$.

- In physics, the score function plays the role of the “drift”: it describes how diffusing particles flow toward the lowest-energy state.
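A tiny numerical check of the first and last bullets, assuming a standard Gaussian $p(x) = \mathcal{N}(0, 1)$. Its score $-(x - \mu)/\sigma^2$ equals the drift $-\mathrm{d}E/\mathrm{d}x$ for the energy $E(x) = -\log p(x)$. The function names are illustrative.

```python
import math


def gaussian_log_p(x, mu=0.0, sigma=1.0):
    return -0.5 * ((x - mu) / sigma) ** 2 - 0.5 * math.log(2 * math.pi * sigma**2)


def gaussian_score(x, mu=0.0, sigma=1.0):
    """Score of N(mu, sigma^2); also the drift -dE/dx for the energy E(x) = -log p(x)."""
    return -(x - mu) / sigma**2


for x in (0.0, 0.1, 0.5, 1.0, 2.0, 4.0):
    print(f"x = {x:3.1f}   log p(x) = {gaussian_log_p(x):7.3f}   s(x) = {gaussian_score(x):+5.1f}")
```

Near the peak at $x = 0$ the score is almost zero. It grows where $\log p(x)$ falls off steeply, and its sign always points back toward the peak.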

3 Score Matching Techniques

Note that since the distribution $p(x)$ is not known, we need some methods to approximate its score $\nabla_x \log p(x)$.

Explicit Score Matching

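Suppose that we are given a dataset $\mathcal{X} = \{x_1, \ldots, x_M\}$. The solution people came up with is to consider the classical kernel density estimation by defining a distribution

$$q_h(x) = \frac{1}{M}\sum_{m=1}^{M} \frac{1}{h} K\left(\frac{x - x_m}{h}\right),$$

where $h$ is a hyperparameter (the bandwidth) of the kernel function $K(\cdot)$, and $x_m$ is the $m$-th sample in the training set.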
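A 1D sketch of $q_h$ and its score, assuming a standard Gaussian kernel for $K$ (the formula allows any kernel) and a made-up five-point training set. For this kernel the score of $q_h$ is a responsibility-weighted average of $-(x - x_m)/h^2$. `kde` and `kde_score` are illustrative names.

```python
import math


def gaussian_kernel(u):
    """Standard normal density, used here as the kernel K (an assumption; any kernel works)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2 * math.pi)


def kde(x, data, h):
    """q_h(x) = (1/M) * sum_m (1/h) * K((x - x_m) / h) for 1D data."""
    return sum(gaussian_kernel((x - xm) / h) / h for xm in data) / len(data)


def kde_score(x, data, h):
    """d/dx log q_h(x) for the Gaussian kernel: responsibility-weighted -(x - x_m) / h^2."""
    logits = [-0.5 * ((x - xm) / h) ** 2 for xm in data]
    top = max(logits)
    weights = [math.exp(lg - top) for lg in logits]
    total = sum(weights)
    return sum(w / total * (-(x - xm) / h**2) for w, xm in zip(weights, data))


data = [-2.0, -1.5, 0.3, 1.8, 2.2]  # toy 1D training set
h = 0.5
dx = 0.01
area = sum(kde(-8.0 + i * dx, data, h) for i in range(1600)) * dx
print(f"Riemann sum of q_h over [-8, 8]: {area:.4f}")
print(f"score at x = 5.0: {kde_score(5.0, data, h):.3f}")  # negative: points back toward the data
```

The Riemann sum confirms that $q_h$ is a proper density. The negative score to the right of the data shows the vector field pulling points back toward the training samples.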

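- The sum of all these individual kernels gives us the overall kernel density estimate $q_h(x)$.

- The idea is similar to a Gaussian mixture model: with a Gaussian kernel, $q_h(x)$ is an equal-weight mixture of $M$ Gaussians centered at the training samples.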

Since $q_h(x)$ is an approximation to $p(x)$, which is never accessible, we can learn $s_\theta(x)$ based on $q_h(x)$. This leads to the following definition of a loss function, which can be used to train a network.

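WARNING: The explicit score matching loss is

$$J_{\text{ESM}}(\theta) \stackrel{\text{def}}{=} \mathbb{E}_{q_h(x)}\left[\frac{1}{2}\left\| s_\theta(x) - \nabla_x \log q_h(x)\right\|^2\right].$$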

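By substituting the kernel density estimation, we can show that the loss is

$$J_{\text{ESM}}(\theta) = \frac{1}{M}\sum_{m=1}^{M} \int \frac{1}{2}\left\| s_\theta(x) - \nabla_x \log q_h(x)\right\|^2 \frac{1}{h} K\left(\frac{x - x_m}{h}\right) dx.$$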
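The kernel-weighted sum over training points can be estimated by Monte Carlo. The sketch below assumes a Gaussian kernel, which makes drawing $x$ from the $m$-th kernel the same as computing $x_m + h z$. It uses plain Python callables as hypothetical candidates for $s_\theta$ in place of a neural network, and evaluates $\frac{1}{M}\sum_m \mathbb{E}_{x \sim \mathcal{N}(x_m, h^2)}\big[\tfrac12 (s_\theta(x) - \partial_x \log q_h(x))^2\big]$.

```python
import math
import random


def kde_score(x, data, h):
    """d/dx log q_h(x) for a Gaussian-kernel KDE (the kernel choice is an assumption)."""
    logits = [-0.5 * ((x - xm) / h) ** 2 for xm in data]
    top = max(logits)
    weights = [math.exp(lg - top) for lg in logits]
    total = sum(weights)
    return sum(w / total * (-(x - xm) / h**2) for w, xm in zip(weights, data))


def esm_loss(s_theta, data, h, n_samples, rng):
    """Monte Carlo estimate of (1/M) sum_m E_{x ~ N(x_m, h^2)}[0.5 * (s_theta(x) - score_qh(x))^2].

    Picking a training point uniformly at random and adding h * z mirrors the
    (1/M) sum over m of kernel-weighted integrals.
    """
    total = 0.0
    for _ in range(n_samples):
        x = rng.choice(data) + h * rng.gauss(0.0, 1.0)
        total += 0.5 * (s_theta(x) - kde_score(x, data, h)) ** 2
    return total / n_samples


data = [-2.0, -1.5, 0.3, 1.8, 2.2]  # toy 1D training set
h = 0.5
loss_exact = esm_loss(lambda x: kde_score(x, data, h), data, h, 5000, random.Random(0))
loss_zero = esm_loss(lambda x: 0.0, data, h, 5000, random.Random(0))
print(f"J_ESM for the exact KDE score: {loss_exact:.4f}, for s_theta = 0: {loss_zero:.4f}")
```

The candidate that reproduces $\nabla_x \log q_h$ gets zero loss, as expected. Note that the minimizer is the score of $q_h$, not of the unknown $p$.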

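Once we train the network $s_\theta$, we can substitute it into the Langevin dynamics equation to obtain the recursion

$$x_{t+1} = x_t + \tau\, s_\theta(x_t) + \sqrt{2\tau}\, z_t, \qquad z_t \sim \mathcal{N}(0, I).$$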
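A sketch of this recursion, assuming, purely hypothetically, that $s_\theta$ has learned the Gaussian-kernel KDE score of a four-point toy dataset exactly. The KDE score is used directly as a stand-in for the network, and chains start from arbitrary points.

```python
import math
import random


def kde_score(x, data, h):
    """d/dx log q_h(x) for a Gaussian-kernel KDE; stands in for a trained s_theta here."""
    logits = [-0.5 * ((x - xm) / h) ** 2 for xm in data]
    top = max(logits)
    weights = [math.exp(lg - top) for lg in logits]
    total = sum(weights)
    return sum(w / total * (-(x - xm) / h**2) for w, xm in zip(weights, data))


data = [-2.0, -1.9, 2.0, 2.1]  # toy 1D training set with two clusters
h = 0.5
tau = 0.01
rng = random.Random(1)

samples = []
for _ in range(500):
    x = rng.uniform(-4.0, 4.0)  # arbitrary initialization
    for _ in range(500):
        # x_{t+1} = x_t + tau * s_theta(x_t) + sqrt(2 tau) * z_t
        x = x + tau * kde_score(x, data, h) + math.sqrt(2 * tau) * rng.gauss(0.0, 1.0)
    samples.append(x)

near = sum(min(abs(x - xm) for xm in data) < 1.5 for x in samples) / len(samples)
left = sum(x < 0 for x in samples) / len(samples)
print(f"within 1.5 of a training point: {near:.3f}; fraction in the left cluster: {left:.2f}")
```

Samples end up around the training points, blurred by the bandwidth $h$. This is exactly what sampling from $q_h$ means, and it foreshadows the issue below: the sampler cannot produce anything the KDE does not already encode.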

Issue:

- Kernel density estimation is a fairly poor non-parametric estimate of the true distribution.

- When we have a limited number of samples and the samples live in a high-dimensional space, the kernel density estimate can perform poorly.

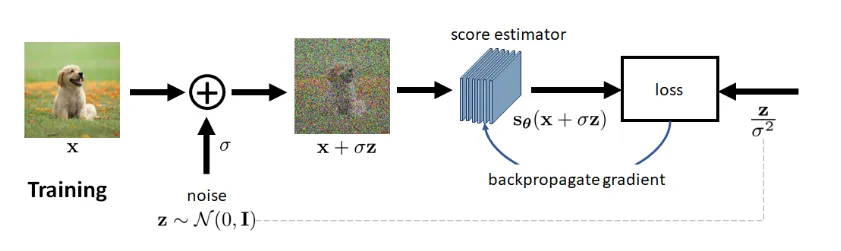
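One symptom of the high-dimensional problem can be checked numerically. With a fixed number of samples, the normalized Gaussian-kernel weights at a query point concentrate on a single nearest sample as the dimension grows. $q_h$ and its score then act like a nearest-neighbor lookup rather than a smooth estimate. The setup below is an arbitrary assumption made only for illustration: $M = 50$ samples and queries drawn from $\mathcal{N}(0, I_d)$, with $h = 0.5$.

```python
import math
import random


def max_kernel_weight(query, data, h):
    """Largest normalized Gaussian-kernel weight K_h(query - x_m) / sum_j K_h(query - x_j)."""
    logits = [-sum((q - v) ** 2 for q, v in zip(query, xm)) / (2 * h**2) for xm in data]
    top = max(logits)
    return 1.0 / sum(math.exp(lg - top) for lg in logits)  # the top term contributes exp(0) = 1


def avg_max_weight(dim, n_data=50, n_queries=200, h=0.5, seed=0):
    """Average, over random queries, of the largest kernel weight; all points ~ N(0, I_dim)."""
    rng = random.Random(seed)

    def draw():
        return [rng.gauss(0.0, 1.0) for _ in range(dim)]

    data = [draw() for _ in range(n_data)]
    return sum(max_kernel_weight(draw(), data, h) for _ in range(n_queries)) / n_queries


for d in (1, 10, 100):
    print(f"d = {d:3d}: average largest kernel weight = {avg_max_weight(d):.3f}")
```

A weight near 1 means a single training point dominates the estimate at that query.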

Denoising Score Matching

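In DSM, the loss function is defined as follows, where $q(x, x') = q(x \mid x')\, q(x')$:

$$J_{\text{DSM}}(\theta) \stackrel{\text{def}}{=} \mathbb{E}_{q(x, x')}\left[\frac{1}{2}\left\| s_\theta(x) - \nabla_x \log q(x \mid x')\right\|^2\right].$$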

The idea comes from the denoising autoencoder approach of using pairs of clean and corrupted examples $(x', x)$. In the generative model, $x'$ can be seen as the clean sample and $x$ as its corrupted (noisy) version.

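The conditional distribution $q(x \mid x')$ does not require an approximation. In the special case where $q(x \mid x') = \mathcal{N}(x \mid x', \sigma^2 I)$, we can let $x = x' + \sigma z$ with $z \sim \mathcal{N}(0, I)$. This gives us

$$\nabla_x \log q(x \mid x') = -\frac{x - x'}{\sigma^2} = -\frac{z}{\sigma}.$$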

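As a result, the loss function of the denoising score matching becomes

$$J_{\text{DSM}}(\theta) = \mathbb{E}_{x' \sim q(x'),\, z \sim \mathcal{N}(0, I)}\left[\frac{1}{2}\left\| s_\theta(x' + \sigma z) + \frac{z}{\sigma}\right\|^2\right].$$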

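Taking the gradient of $\log q(x \mid x')$ removes the Gaussian's normalization constant, because that constant does not depend on $x$. Rename the dummy variable $x'$ to $x$, and note that, given a training set, sampling from $q(x')$ amounts to sampling from $p(x)$. This yields the final DSM loss.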
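For the Gaussian corruption $x = x' + \sigma z$, the gradient $\nabla_x \log \mathcal{N}(x \mid x', \sigma^2 I)$ should equal $-z/\sigma$, and the normalization constant should play no role. A quick finite-difference check of that identity follows. The dimension $d = 4$ and $\sigma = 0.3$ are arbitrary choices, and `log_q_cond` is an illustrative name.

```python
import math
import random


def log_q_cond(x, x_clean, sigma):
    """log N(x | x', sigma^2 I), including its normalization constant."""
    d = len(x)
    sq = sum((a - b) ** 2 for a, b in zip(x, x_clean))
    return -sq / (2 * sigma**2) - 0.5 * d * math.log(2 * math.pi * sigma**2)


rng = random.Random(0)
sigma = 0.3
x_clean = [rng.gauss(0.0, 1.0) for _ in range(4)]
z = [rng.gauss(0.0, 1.0) for _ in range(4)]
x_noisy = [xc + sigma * zi for xc, zi in zip(x_clean, z)]  # x = x' + sigma z

eps = 1e-6
grad = []
for i in range(4):
    x_plus = list(x_noisy)
    x_plus[i] += eps
    x_minus = list(x_noisy)
    x_minus[i] -= eps
    grad.append((log_q_cond(x_plus, x_clean, sigma) - log_q_cond(x_minus, x_clean, sigma)) / (2 * eps))

print([round(g, 4) for g in grad])
print([round(-zi / sigma, 4) for zi in z])  # should match the line above
```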

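WARNING: The denoising score matching loss is defined as

$$J_{\text{DSM}}(\theta) = \mathbb{E}_{x \sim p(x),\, z \sim \mathcal{N}(0, I)}\left[\frac{1}{2}\left\| s_\theta(x + \sigma z) + \frac{z}{\sigma}\right\|^2\right].$$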

- The quantity $x + \sigma z$ is effectively adding noise $\sigma z$ to a clean image $x$.

- The score function $s_\theta$ is supposed to take this noisy image and predict the (scaled, negated) noise $-z/\sigma$.

- Predicting noise is equivalent to denoising, because the denoised image plus the predicted noise gives back the noisy observation.
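A Monte Carlo sketch of this loss for a toy case. The clean data are assumed to be $p(x) = \mathcal{N}(0, 1)$ and $\sigma = 0.5$, so the noisy marginal is $\mathcal{N}(0, 1 + \sigma^2)$ with score $-y/(1 + \sigma^2)$. Three hand-written candidates for $s_\theta$ (hypothetical, not trained) are compared on identical $(x, z)$ draws.

```python
import random


def dsm_loss(s_theta, sigma, n, seed):
    """Monte Carlo estimate of E_{x ~ p, z ~ N(0,1)}[0.5 * (s_theta(x + sigma z) + z / sigma)^2].

    Assumes toy 1D clean data p(x) = N(0, 1). Reusing the seed gives every candidate
    the same (x, z) draws, so the comparison is not blurred by sampling noise.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)  # clean sample
        z = rng.gauss(0.0, 1.0)  # noise
        total += 0.5 * (s_theta(x + sigma * z) + z / sigma) ** 2
    return total / n


sigma = 0.5
candidates = {
    "noisy-marginal score -y/(1+sigma^2)": lambda y: -y / (1 + sigma**2),
    "clean-data score -y": lambda y: -y,
    "zero": lambda y: 0.0,
}
losses = {name: dsm_loss(f, sigma, 20000, seed=0) for name, f in candidates.items()}
for name, value in losses.items():
    print(f"{name:40s} {value:.3f}")
```

The score of the noisy marginal attains the lowest value. That value is not zero (analytically $\tfrac12(1/\sigma^2 - 1/(1+\sigma^2)) = 1.6$ here), because the targets $-z/\sigma$ are themselves noisy. This nonzero floor is one way to see the $\theta$-independent constant that appears when DSM is compared with ESM.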

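The training step can be described simply as follows. Given a training dataset $\{x^{(\ell)}\}_{\ell=1}^{L}$, we train a network $\theta$ with the goal

$$\theta^* = \arg\min_\theta \frac{1}{L}\sum_{\ell=1}^{L} \frac{1}{2}\left\| s_\theta\!\left(x^{(\ell)} + \sigma z^{(\ell)}\right) + \frac{z^{(\ell)}}{\sigma}\right\|^2, \qquad z^{(\ell)} \sim \mathcal{N}(0, I).$$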
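A minimal training sketch under strong simplifying assumptions. The "network" is a hypothetical linear model $s_\theta(y) = a y + b$, and clean samples are drawn fresh from an assumed $\mathcal{N}(2, 1)$ rather than read from a fixed dataset. The model is trained by minibatch SGD on $\tfrac12\,(s_\theta(x + \sigma z) + z/\sigma)^2$ with a single noise level $\sigma = 0.5$.

```python
import random


def train_linear_score(mu, s, sigma, lr=0.05, batch=64, steps=3000, seed=0):
    """Fit a hypothetical linear score model s_theta(y) = a*y + b by minibatch SGD on the DSM loss.

    Toy assumptions: 1D clean data x ~ N(mu, s^2) and a single noise level sigma.
    """
    rng = random.Random(seed)
    a, b = 0.0, 0.0
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for _ in range(batch):
            x = rng.gauss(mu, s)          # clean training sample
            z = rng.gauss(0.0, 1.0)       # fresh noise each time
            y = x + sigma * z             # noisy input
            r = a * y + b + z / sigma     # residual s_theta(y) + z / sigma
            grad_a += r * y               # d/da of 0.5 * r^2
            grad_b += r                   # d/db of 0.5 * r^2
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch
    return a, b


mu, s, sigma = 2.0, 1.0, 0.5
a, b = train_linear_score(mu, s, sigma)
var_noisy = s**2 + sigma**2
# The minimizer is the score of the noisy marginal N(mu, s^2 + sigma^2): -(y - mu) / (s^2 + sigma^2).
print(f"a = {a:.3f} (expected {-1 / var_noisy:.3f}), b = {b:.3f} (expected {mu / var_noisy:.3f})")
```

A linear model can only represent Gaussian scores, which is why the toy data were chosen to be Gaussian. The point is that the parameters drift toward the score of the noise-perturbed data distribution without that score ever being written down.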

The last question is: why does the DSM loss make sense?

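WARNING: Theorem. Up to a constant $C$ that is independent of the variable $\theta$, it holds that

$$J_{\text{DSM}}(\theta) = J_{\text{ESM}}(\theta) + C,$$

where $J_{\text{ESM}}$ is taken with respect to the noisy marginal $q(x) = \int q(x \mid x')\, q(x')\, dx'$.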

The proof is not hard.
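A sketch of the standard argument, assuming $q(x) = \int q(x \mid x')\, q(x')\, dx'$ and that the gradient and the integral can be exchanged. Expand both squared norms. The terms $\tfrac12\|\nabla_x \log q(x)\|^2$ and $\tfrac12\|\nabla_x \log q(x \mid x')\|^2$ do not involve $\theta$ and are collected into $C_1$ and $C_2$. The only nontrivial step is the cross term:

```latex
\begin{aligned}
J_{\text{ESM}}(\theta) &= \mathbb{E}_{q(x)}\!\left[\tfrac12\|s_\theta(x)\|^2\right]
  - \mathbb{E}_{q(x)}\!\left[s_\theta(x)^\top \nabla_x \log q(x)\right] + C_1,\\
J_{\text{DSM}}(\theta) &= \mathbb{E}_{q(x)}\!\left[\tfrac12\|s_\theta(x)\|^2\right]
  - \mathbb{E}_{q(x,x')}\!\left[s_\theta(x)^\top \nabla_x \log q(x \mid x')\right] + C_2,\\[4pt]
\mathbb{E}_{q(x)}\!\left[s_\theta(x)^\top \nabla_x \log q(x)\right]
  &= \int s_\theta(x)^\top \nabla_x q(x)\, dx
   = \int s_\theta(x)^\top \nabla_x \!\int q(x \mid x')\, q(x')\, dx'\, dx \\
  &= \iint q(x')\, q(x \mid x')\, s_\theta(x)^\top \nabla_x \log q(x \mid x')\, dx'\, dx
   = \mathbb{E}_{q(x,x')}\!\left[s_\theta(x)^\top \nabla_x \log q(x \mid x')\right],\\[4pt]
\Rightarrow\quad J_{\text{DSM}}(\theta) &= J_{\text{ESM}}(\theta) + (C_2 - C_1).
\end{aligned}
```

The first term of $J_{\text{DSM}}$ is written as an expectation over $q(x)$ alone because $\|s_\theta(x)\|^2$ does not depend on $x'$.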

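For inference, we assume that we have already trained the score estimator $s_\theta$. To generate an image, we perform the following procedure for $t = 1, \ldots, T$:

$$x_{t+1} = x_t + \tau\, s_\theta(x_t) + \sqrt{2\tau}\, z_t, \qquad z_t \sim \mathcal{N}(0, I).$$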
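An end-to-end toy sketch of training followed by inference. The assumptions are the same as before: 1D clean data from $\mathcal{N}(2, 1)$, one noise level $\sigma = 0.5$, and a hypothetical linear $s_\theta(y) = a y + b$. Because the DSM objective is a least-squares problem for a linear model, the "training" here is a closed-form regression of the targets $-z/\sigma$ on the noisy inputs. Langevin sampling then starts from $\mathcal{N}(0, 1)$. `fit_linear_score_lstsq` and `generate` are illustrative names.

```python
import math
import random


def fit_linear_score_lstsq(clean, sigma, rng):
    """Stand-in for a trained network: least-squares fit of s(y) = a*y + b to DSM targets -z/sigma."""
    ys, ts = [], []
    for x in clean:
        z = rng.gauss(0.0, 1.0)
        ys.append(x + sigma * z)  # noisy input
        ts.append(-z / sigma)     # DSM regression target
    n = len(ys)
    my, mt = sum(ys) / n, sum(ts) / n
    cov = sum((y - my) * (t - mt) for y, t in zip(ys, ts)) / n
    var = sum((y - my) ** 2 for y in ys) / n
    a = cov / var
    return a, mt - a * my


def generate(s_theta, n_samples, tau, n_steps, rng):
    """Langevin sampling x_{t+1} = x_t + tau * s_theta(x_t) + sqrt(2 tau) z_t from a N(0, 1) start."""
    out = []
    for _ in range(n_samples):
        x = rng.gauss(0.0, 1.0)
        for _ in range(n_steps):
            x = x + tau * s_theta(x) + math.sqrt(2 * tau) * rng.gauss(0.0, 1.0)
        out.append(x)
    return out


rng = random.Random(0)
mu, s, sigma = 2.0, 1.0, 0.5
train = [rng.gauss(mu, s) for _ in range(20000)]  # toy training set
a, b = fit_linear_score_lstsq(train, sigma, rng)
samples = generate(lambda x: a * x + b, n_samples=1000, tau=0.02, n_steps=800, rng=rng)
m = sum(samples) / len(samples)
v = sum((x - m) ** 2 for x in samples) / len(samples)
print(f"sample mean {m:.2f} (target {mu}), variance {v:.2f} (target {s**2 + sigma**2})")
```

With a single fixed $\sigma$, the learned score is that of the noise-perturbed distribution $\mathcal{N}(\mu, s^2 + \sigma^2)$. The samples therefore carry the extra variance $\sigma^2$, consistent with the theorem's statement that DSM matches the score of the noisy marginal.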

Reference

[1] Chan, Stanley H. “Tutorial on Diffusion Models for Imaging and Vision.” arXiv preprint arXiv:2403.18103 (2024).