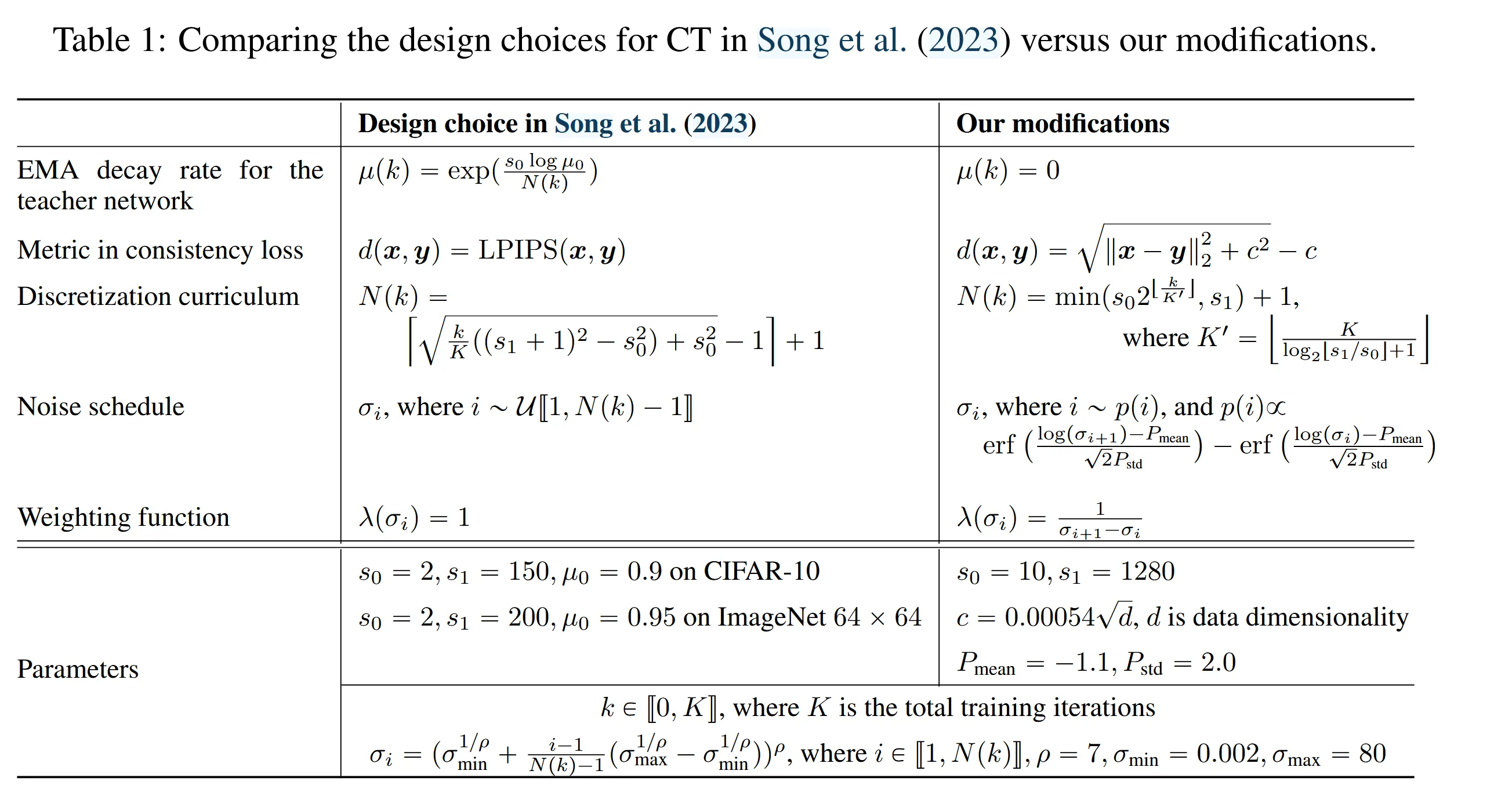

This paper focuses on consistency training. The main changes are shown in the table.

1 Weighting Function, Noise Embedding, and Dropout

The default weighting function assigns equal weights to all noise levels but is suboptimal. The paper refines this by introducing , which decreases as noise increases. This prioritizes smaller noise levels, improving sample quality in CT with the squared metric.

Song et al. (2023) use Fourier embeddings for CIFAR-10 and positional embeddings for ImageNet, balancing sensitivity to noise differences with training stability. Excessive sensitivity can cause divergence in continuous-time CT, which they address by pre-training with a diffusion model. This work shows that continuous-time CT can also converge with random initialization by reducing the Fourier scale parameter, improving stability. For discrete-time CT, reduced sensitivity slightly improves FIDs on CIFAR-10, and ImageNet models use default positional embeddings due to their comparable sensitivity to Fourier embeddings with a scale of 0.02.

Song et al. (2023) use zero dropout for consistency models, assuming single-step sampling reduces overfitting. However, higher dropout rates improve sample quality. Synchronizing dropout RNGs across student and teacher networks further stabilizes CT optimization.

With refined weighting functions, noise embeddings, and dropout, the sample quality of consistency models under the squared metric improves significantly.

2 Removing EMA for the Teacher Network

- For CT, the EMA decay rate for the teacher network should always be set to zero, although it can be non-zero for CD.

- Omitting EMA from the teacher network in CT significantly improves the sample quality of consistency models.

- Only when does the objective of CT converge to that of the CM as .

3 Pseudo-Huber Metric Functions

This paper employs the Pseudo-Huber metric family, defined as

where is an adjustable parameter. The Pseudo-Huber metric provides a smooth interpolation between the and squared norms, with controlling the width of the parabolic transition region. It is twice continuously differentiable, satisfying the theoretical requirements for CT.

The paper suggests that should scale linearly with and proposes a heuristic

where is the dimensionality of the image.

4 Improved Curriculum for Total Discretization Steps

CT’s theoretical foundation holds asymptotically as . In practice, we have to select a finite for training consistency models, potentially introducing bias into the learning process. This paper uses an exponentially increasing curriculum for the total discretization steps , doubling after a set number of training iterations. Specifically, the curriculum is described by

The sample quality of consistency models improves predictably as increases. While larger can reduce bias in CT, they might increase variance. On the contrary, smaller reduces variance at the cost of higher bias. We cap at 1281 in , which we empirically find to strike a good balance between bias and variance. In our experiments, we set and in discretization curriculums from their default values of 2 and 150 in Song et al. (2023) to and respectively.

5 Improved Noise Schedulers

Song (2023) proposes sampling a random from to select and for computing the CT objective. The noise levels are defined as:

As , the distribution of converges to:

This derivation uses the formula , where as .

The resulting distribution favors higher values of , which biases the sampling process. To address this, this paper adopt a lognormal distribution with a mean of and standard deviation of . This adjustment reduces the emphasis on high noise levels while also moderating the focus on smaller ones, which benefits learning due to the inductive bias of the consistency model’s parameterization.

For practical implementation, we discretize the lognormal distribution over as:

where and .